Recent events, such as the New Zealand mosque shooting in Christchurch and foreign meddling in the 2016 U.S. election, have made internet content moderation a subject of international concern. To shield users from harmful and misleading content, internet platforms scramble to review billions of uploads each day. A large portion of that content is health-related, and platforms are increasingly choosing to police it.

Facebook recently updated its policies on health-related content. On July 2, the platform announced new tools to reduce the appearance of sensational health claims on the site. According to Facebook, “in order to help people get accurate health information and the support they need, it’s imperative that we minimize health content that is sensational or misleading.”

Platforms as Regulators

As internet platforms such as Facebook increasingly moderate health-related content, they assume the role of public health regulators: they decide what kind of information is healthy or harmful for people to view. This role is typically filled by government agencies such as state public health departments, the Food and Drug Administration (FDA), and the Centers for Disease Control and Prevention (CDC). For instance, the FDA regulates the content of drug and medical device advertising, preventing it from making unfounded claims. It is unclear whether internet platforms are qualified to wield such power and whether they can be trusted with it.

With over 2 billion users worldwide, Facebook’s content moderation decisions have far-reaching effects. If its public health decisions are not sound, they could negatively impact the health of millions. Unlike the FDA and CDC, whose missions are to defend and maximize public health, the mandate of internet platforms is to maximize shareholder profit.

Facebook’s recent policy changes are not its first attempt at public health regulation. In February, the platform started censoring content it believes promotes self-harm. In March, it cracked down on content that is critical of vaccinations.

Other platforms have taken similar steps to reduce misleading health content: YouTube demonetized “anti-vax” videos, Pinterest banned vaccination-related searches from its platform, and Amazon removed anti-vax books and documentaries from its site. The crowdfunding sites GoFundMe and Indiegogo banned fundraising campaigns for unproven medical therapies, including controversial cancer treatments.

Spreading misinformation regarding childhood vaccinations is harmful and may be associated with a global rise in childhood infectious diseases. Similarly, promoting sham medical therapies can be deadly. However, there are many issues in science and medicine on which there is little consensus. How do internet platforms decide whether content in these fields is trustworthy?

Key Policy Updates

According to its July announcement, Facebook made two key policy updates: “For the first update, we consider if a post about health exaggerates or misleads — for example, making a sensational claim about a miracle cure.” For the second update, Facebook will “consider if a post promotes a product or service based on a health-related claim — for example, promoting a medication or pill claiming to help you lose weight.”

Facebook says it handles this information “in a similar way to how we’ve previously reduced low-quality content like clickbait: by identifying phrases that were commonly used in these posts to predict which posts might include sensational health claims.” Facebook says “this is similar to how many email spam filters work.”

Based on this information, Facebook likely identifies misleading content by using machine learning to find words and phrases that were previously spotted in such content. However, instead of describing how Facebook decides what is sensational or misleading, this explanation merely pushes the inquiry back one step: How did Facebook decide whether the original content (on which its algorithms were trained) was sensational or misleading? Is identifying misleading health content one of those situations where you know it when you see it? Probably not.
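To make the circularity concrete, here is a minimal sketch of a spam-filter-style word model. It is not Facebook’s actual system, which has not been published; the training posts, their labels, and the function names `train` and `sensational_score` are all hypothetical. The point it illustrates is that every score traces back to labels a person assigned by hand.

```python
import math
from collections import Counter

# Hypothetical, hand-labeled training posts (True = "sensational").
# These labels are the assumption that matters: someone had to decide them.
TRAINING_POSTS = [
    ("this miracle cure melts fat overnight", True),
    ("doctors hate this one weird miracle pill", True),
    ("lose weight fast with this secret pill", True),
    ("my doctor adjusted my blood pressure medication", False),
    ("flu shots are available at the clinic this week", False),
    ("physical therapy helped my knee recover", False),
]


def train(posts):
    """Count word occurrences per label (a naive Bayes-style word model)."""
    counts = {True: Counter(), False: Counter()}
    for text, label in posts:
        counts[label].update(text.lower().split())
    return counts


def sensational_score(text, counts):
    """Sum smoothed log-odds per known word; above 0 leans 'sensational'."""
    vocab = set(counts[True]) | set(counts[False])
    totals = {label: sum(c.values()) for label, c in counts.items()}
    score = 0.0
    for word in text.lower().split():
        if word not in vocab:
            continue  # unseen words carry no evidence in this sketch
        # Add-one smoothing so a word seen under one label only is not infinite.
        p_true = (counts[True][word] + 1) / (totals[True] + len(vocab))
        p_false = (counts[False][word] + 1) / (totals[False] + len(vocab))
        score += math.log(p_true / p_false)
    return score


counts = train(TRAINING_POSTS)
```

In this toy model, `sensational_score("miracle pill", counts)` comes out positive only because the labeled examples said so. Adding a single hypothetical training post such as `("kratom cured my pain", True)` raises the score of “kratom helped my back pain” without any new medical evidence entering the system.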

In fields such as mental health, nutrition, physical fitness, and preventative medicine, experts often hold widely divergent views. Yet internet platforms are policing these areas as if they can deftly separate medical fact from fiction. For instance, when Facebook took steps to censor content that promotes self-harm, it decided that viewing images of people’s self-inflicted scars would be harmful to others. It subsequently blurred images of users’ healed scars and removed them from search results.

Facebook said that experts it consulted suggested this course of action. However, an independent group of experts on self-harm claims that Facebook’s actions might do more harm than good. Specifically, censoring images of people’s scars might reinforce users’ sense of shame and drive them to harm themselves. Moreover, it could stigmatize and further marginalize people with mental health conditions.

Censoring the Scientific Process

In addition to harming users of internet platforms, censoring health-related content might inhibit citizen science, hinder community reporting of adverse drug events, and prevent alternative therapies from becoming mainstream. For example, there is ongoing public debate surrounding an herbal remedy called kratom. According to the FDA, kratom is dangerous and has no accepted medical benefits. Yet an objective review of the evidence does not support that conclusion. Deaths attributed to kratom were likely due to the consumption of other substances, such as illicit opioids, and thousands of people claim kratom helps them cope with symptoms of chronic pain, post-traumatic stress disorder, and opioid use disorders.

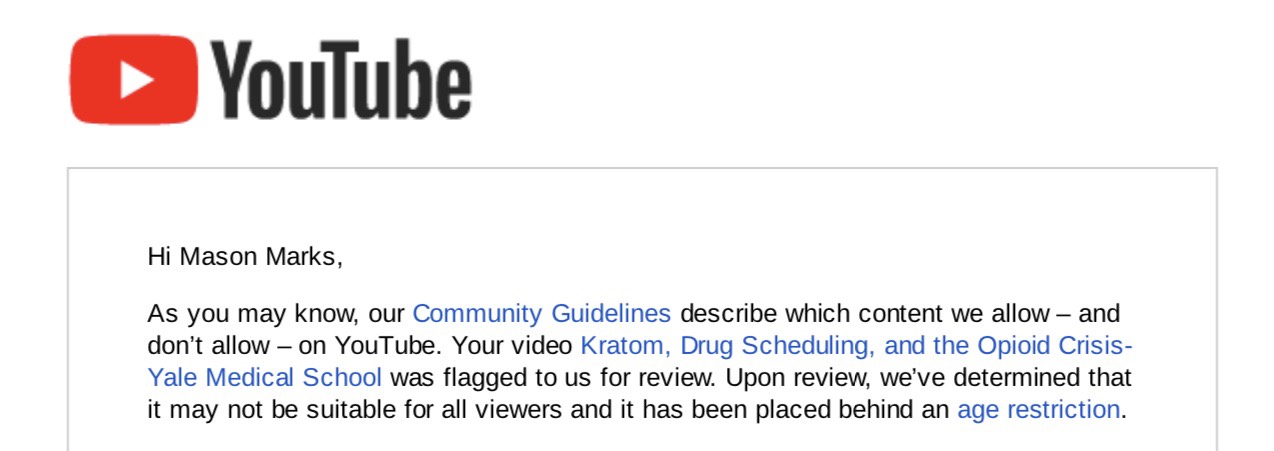

If a Facebook user posts the message “kratom helped treat my back pain!”, should Facebook categorize that sentence as misleading and censor it because the FDA claims kratom has no medical applications? Clearly it should not. However, at least one platform is censoring kratom-related content: YouTube “age restricted” my educational video, “Kratom, Drug Scheduling, and the Opioid Crisis – Yale Medical School.” Age restriction is a form of censorship that makes content invisible to viewers who are logged out, under 18, or have YouTube’s “restricted mode” enabled. Restricted mode is used by schools, libraries, and public institutions to limit viewing of potentially objectionable content.

The discussion of emerging medical treatments like kratom on internet platforms is part of the scientific process. Though far from constituting hard clinical evidence, such discussions allow people with health conditions to share their experiences and discuss the risks and benefits of medical treatments. Those discussions may generate interest among researchers who can then conduct trials to gather stronger evidence of medical benefit. The censorship of health-related content by internet platforms threatens this process.

In the future, it may be necessary to develop tools to measure the veracity of health claims. However, right now, it is likely impossible to do so reliably. Even if such a system were feasible, it might not be desirable, and it is unclear who would be qualified to implement it. Though not infallible or impervious to outside pressures, scientifically grounded agencies such as the CDC and FDA are better choices than Facebook and Google.